Most people treat AI like a magic 8-ball—shake it, ask a vague question, and hope for wisdom.

Then they wonder why the output feels generic, incomplete, or just plain wrong.

Here’s what’s actually happening: large language models (LLMs) aren’t mind readers. They’re remarkably capable, but they lack one critical thing that only you can provide: context.

How not to write a prompt

In fact, a surprising number of people write prompts in ChatGPT the way they’d ask a friend for a favor at the airport.

Make this ad creative better.

Write a blog post about data integration tools.

Help me analyze sales in the last month.

And then they’re shocked when the result misses the mark.

Users often expect more from an LLM than they would from a human colleague given the same vague request. This leads to frustration and the false belief that the AI isn’t capable, when the real problem is unclear communication from the start.

The bigger issue is that for years, big tech companies taught us to search using short, keyword-based queries. Google rewarded brevity—just type “weather NYC” or “best laptop 2025” and you’d get results.

But prompting AI effectively requires the opposite approach. You need to be verbose, specific, and contextual. The skills that made you good at Google Search can actually make you bad at AI prompting.

What’s missing is context—the starting point, the constraints, the goal. And the art of prompting is how you deliver that context.

Whether you’re a marketer trying to speed up content creation, an analyst automating reports, or a founder exploring AI tools, one thing is certain:

Better prompting unlocks better results. And better results are where the ROI kicks in.

Context is king

If generative AI is the engine, your prompt is the map that guides it to the destination. And most people are still learning how to draw that map.

Prompting is a form of programming. A set of instructions for a machine that doesn’t know your boss, your brand, or that thing you hate when emails start with “hope this finds you well.”

It may feel like magic, but prompting is nothing of the sort. It’s mechanics. And the best mechanics know how to listen to the machine.

There’s a science to how models process inputs (tokens, temperature, memory), but there’s also an art to how humans get better outputs from those models.
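To make “temperature” a little more concrete: at each step, a model scores candidate next tokens and turns those scores (logits) into probabilities. A common formulation divides the logits by the temperature before applying softmax. The sketch below uses made-up logits for three hypothetical tokens to show how a lower temperature concentrates probability on the top choice while a higher one spreads it out. It illustrates the general idea only, not any specific vendor’s sampler.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three hypothetical candidate tokens.
logits = [2.0, 1.0, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: " + ", ".join(f"{p:.2f}" for p in probs))
```

Low temperatures make the output more predictable; higher ones make it more varied, which is why creative tasks and factual tasks often call for different settings.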

One user might ask a model to “write a professional summary of this text.” Another might say, “Summarize this as if you were a compliance officer reporting to an anxious CEO.” The second will win. Almost every time.

Because it adds structure. Role. Tone. Intent.

Communicating with LLMs requires the precision of technical documentation — you need to be explicit about requirements, provide clear specifications, and leave nothing to assumption.

What is prompt engineering?

Prompt engineering is the practice of designing precise inputs that guide large language models (LLMs) toward useful, accurate outputs that match what you’re trying to achieve.

At its core, it’s about giving the model what it needs to succeed. This includes, but isn’t limited to, clear instructions, relevant context, and a defined goal. But as GenAI tools evolve, so do the techniques. Enter: context engineering.

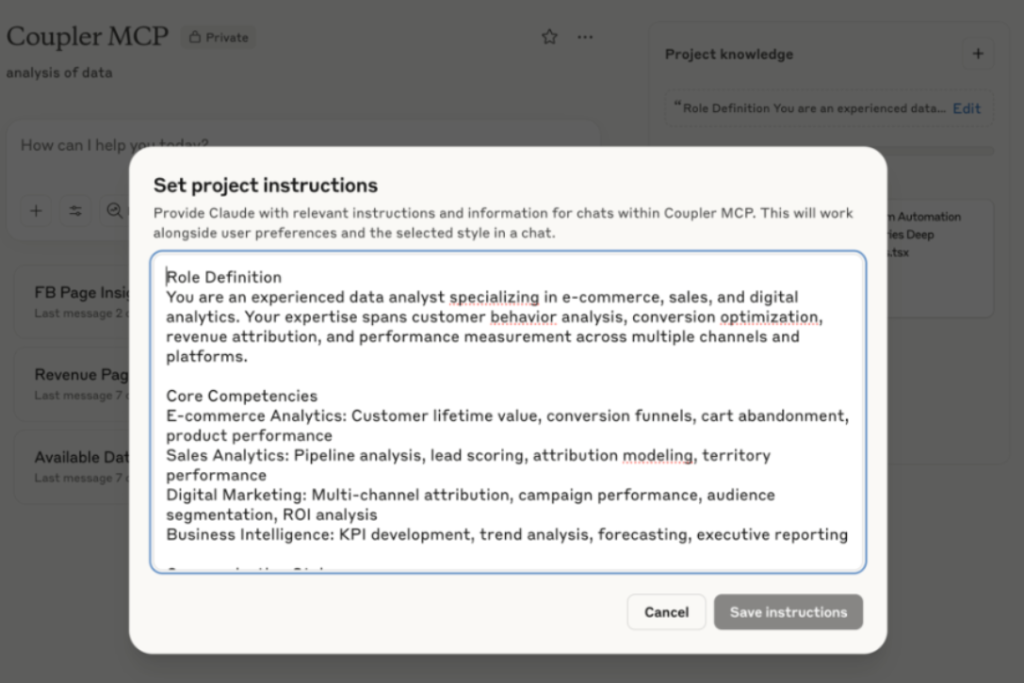

Context engineering is what comes next, once you move beyond simply writing better instructions. It’s about curating the model’s worldview in that moment, priming it with prior examples, shaping its assumed role, and guiding its tone through formatting, metadata, or tool-integrated context. Think of it as setting the stage before the actor walks in.
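One way to picture context engineering: many chat-style APIs accept a list of messages tagged with roles such as “system”, “user”, and “assistant”. The sketch below assembles such a list in plain Python, with a role-setting system message that also carries metadata, a few example exchanges to prime the model, and the real request last. The `build_messages` helper and the exact field names are assumptions for illustration; check your provider’s documentation for its actual request format.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str      # "system", "user", or "assistant"
    content: str


def build_messages(
    persona: str,
    examples: list[tuple[str, str]],
    metadata: dict[str, str],
    request: str,
) -> list[Message]:
    """Assemble a hypothetical chat payload that sets the stage before the request."""
    # The system message shapes the assumed role and carries metadata as context.
    context_lines = [f"{key}: {value}" for key, value in metadata.items()]
    system = persona + "\n\nContext:\n" + "\n".join(context_lines)
    messages: list[Message] = [{"role": "system", "content": system}]
    # Prior example exchanges prime the model with the expected style and format.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The actual request comes last.
    messages.append({"role": "user", "content": request})
    return messages


messages = build_messages(
    persona="You are a compliance officer reporting to an anxious CEO.",
    examples=[("Summarize: Q2 audit found two minor issues.",
               "Bottom line: low risk. Two minor issues, both fixable this quarter.")],
    metadata={"audience": "CEO", "tone": "calm, direct", "format": "3 bullet points"},
    request="Summarize: The vendor contract lacks a data-retention clause.",
)
for message in messages:
    print(message["role"], "->", message["content"][:60])
```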

Why do you need to master prompting?

Considering how powerful LLMs have grown, the prompt is now the entire interface instead of just a request.

Whether you’re a marketer, analyst, recruiter, or founder, chances are, GenAI is either already part of your workflow or soon will be.

You don’t need to know SQL or Python. You don’t need to fine-tune models.

But you do need to know how to talk to the machine. And the numbers prove it.

Anthropic reported that a Fortune 500 company improved Claude’s accuracy by 20% and cut deployment costs by applying structured prompt engineering techniques across customer support workflows. By redesigning their AI prompts to include reasoning steps and few-shot examples, they made responses faster, more consistent, and on-brand.

Polestar LLP built a GenAI tool that lets non-technical employees query databases using natural language prompts instead of SQL. HR staff can now ask, “What’s the attrition rate in the marketing department?” directly in MS Teams and receive real-time data, tables, and plots without writing a single line of code or waiting on developers.

This is exactly the kind of workflow modern AI integrations enable. Platforms like Coupler.io make similar capabilities accessible to teams without engineering resources—connecting business tools like HubSpot, Google Analytics, and Salesforce to AI so non-technical users can ask questions and get instant, accurate answers from their actual data.

Prompt engineering is no longer a niche skill. It’s a superpower. Based on what we’ve witnessed, it helps:

- Marketers reduce copy churn by 80%.

- Analysts automate their monthly reporting.

- HR teams generate customized job ads in seconds.

- Support agents get draft replies that sound like your brand.

And that’s just scratching the surface.

Better prompts = faster tasks = less frustration = actual ROI.

AI has taken over a significant part of my workflows: it writes a fair share of my requirements, and my web research has gotten faster too. Now I can start with a conversation with AI and then dive deeper if needed. All this has saved time, which I now spend reading news about AI 😀

Prompt engineering isn’t just a marketing buzzword anymore. Knowing how to use it to produce results is now part of the basic digital literacy this age demands.

And the earlier you invest, the faster your team compounds the benefits.

How to prompt: core principles and psychology

Before we dive into techniques, it’s important to understand who, or what, you’re talking to.

Most people assume all GenAI models behave the same. But they don’t. Some are great at friendly dialogue. Others are built to think through problems. That difference shapes everything about how you should prompt.

Chat models like ChatGPT are designed for humanlike interaction, empathy, and tone-matching. They excel at conversation, creative writing, and quick responses. But in 2025, the lines have blurred significantly.

The latest frontier models now combine conversational abilities with deep reasoning capabilities. GPT-5, for instance, uses an intelligent router that automatically switches between fast responses and extended “thinking” mode based on task complexity. So which models should you reach for?

| Task Type | Best Models | When to Use |
| --- | --- | --- |
| Coding & Application Building | GPT-5 family, Claude Sonnet 4.5, Gemini 2.5 Pro | Writing, reviewing, and debugging code; building applications |
| Deep Research & Long-Form Analysis | Claude Opus 4.1, Claude Sonnet 4.5, Gemini 2.5 Pro | Analyzing complex documents, handling massive context windows (200K-2M tokens), producing thoughtful, detailed explanations |
| Fast General Tasks | GPT-5, GPT-5 Mini, GPT-5 Nano | Quick content generation, everyday productivity, multi-step problem solving with automatic reasoning |
| Real-Time Research with Citations | Perplexity Sonar | Current information, fact-checking recent events, transparent answers with linked sources, deep research mode |
| Multimodal Tasks | GPT-5, Gemini 2.5 Pro | Tasks involving images, visual understanding, integration with productivity tools. Multimodal means the model can process and generate multiple types of content (text, images, code, and more) within the same interaction. |

When you’re stuck, try your prompt on multiple platforms. Each model’s clarifying questions reveal gaps you can fill. I often test my prompts on Claude, ChatGPT, and Perplexity, and each one asks different questions about my request. I then use those questions to refine my prompt, which helps me gather as much context as possible.

So when you write a prompt, ask yourself: Do I need a research assistant or a creative collaborator? This mental shift alone improves prompt design. It lets you choose the right structure, tone, and format depending on your goal.

Three major types of prompts

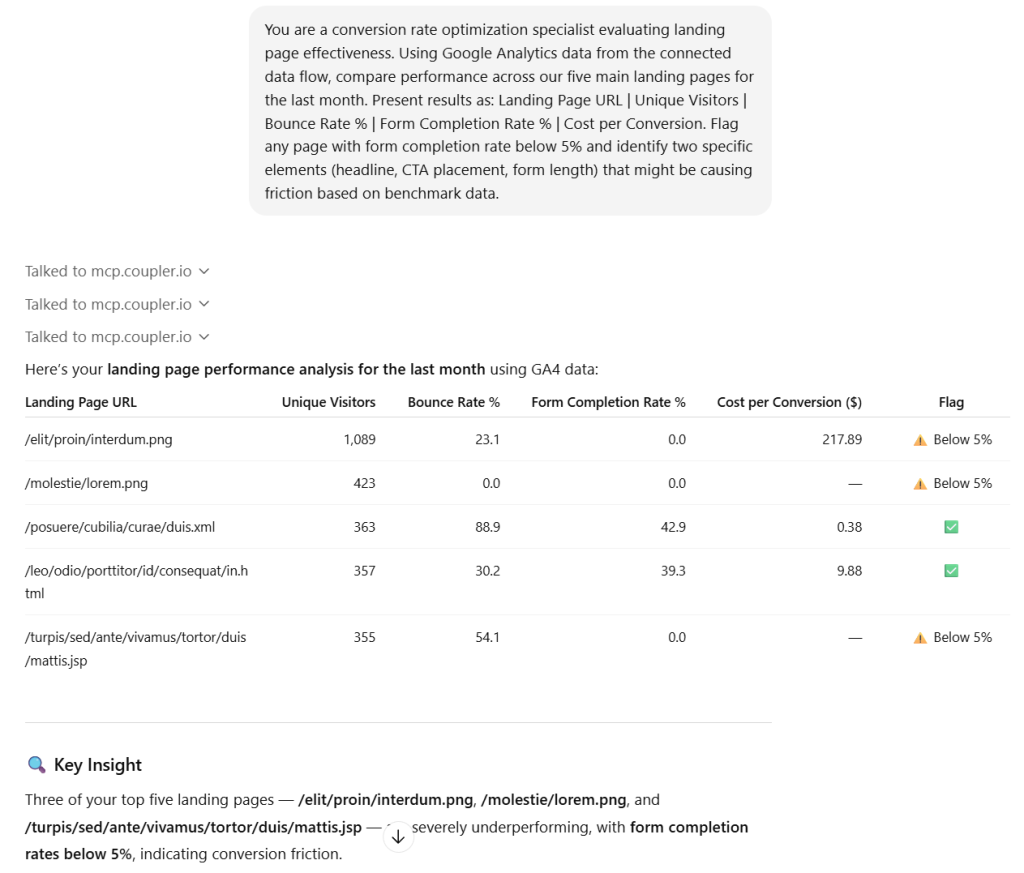

- Research prompts help you get factual or domain‑specific knowledge and work best with clear instructions and context, like asking:

You are a conversion rate optimization specialist evaluating landing page effectiveness. Using Google Analytics data from our latest product launch campaign (October-December 2024), compare performance across our five main landing pages. Present results as: Landing Page URL | Unique Visitors | Bounce Rate % | Form Completion Rate % | Cost per Conversion. Flag any page with form completion rate below 5% and identify two specific elements (headline, CTA placement, form length) that might be causing friction based on benchmark data.

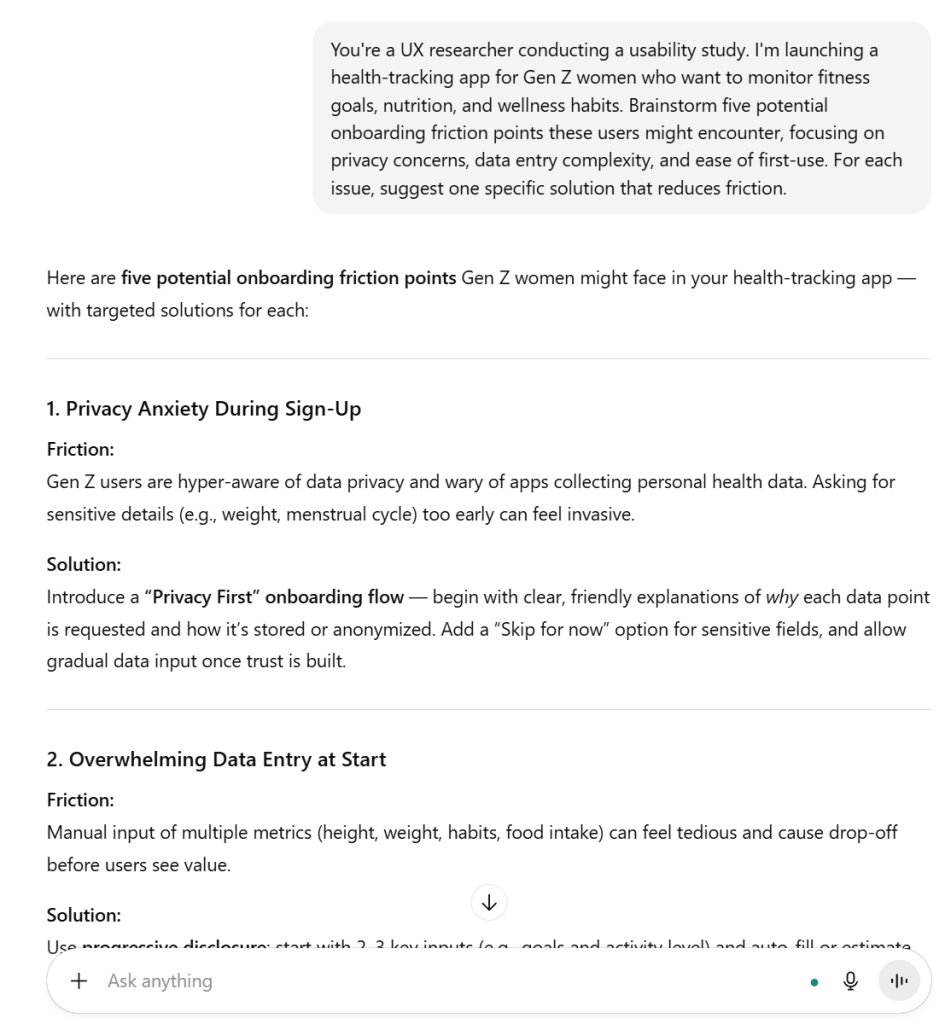

- Conversational prompts are ideal for storytelling, simulations, or brainstorming because they allow AI to “flow” with your thoughts. These prompts benefit from role-play elements and clear context about audience and purpose.

You're a UX researcher conducting a usability study. I'm launching a health-tracking app for Gen Z women who want to monitor fitness goals, nutrition, and wellness habits. Brainstorm five potential onboarding friction points these users might encounter, focusing on privacy concerns, data entry complexity, and ease of first-use. For each issue, suggest one specific solution that reduces friction.

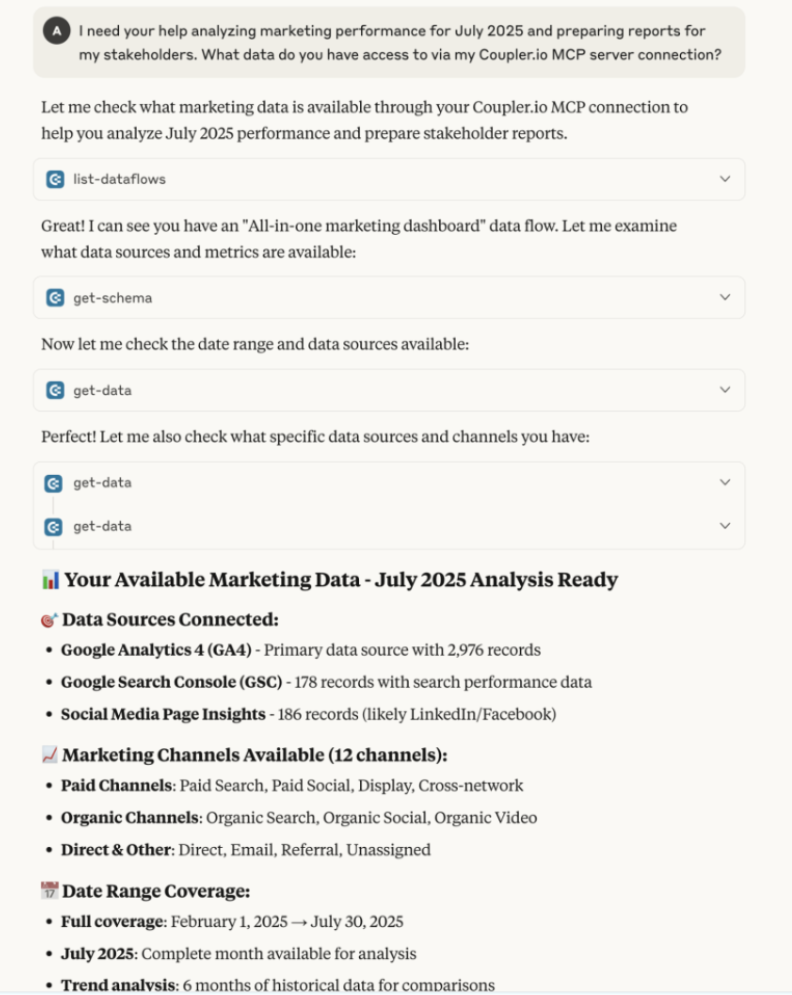

- Agentic prompts are action-based and designed for workflows where AI must interact with tools, query live data, or execute multi-step processes. These prompts require the AI to use tools in the loop to achieve goals—whether that’s searching the web, querying a database, or accessing connected systems.

I need your help analyzing marketing performance for July 2025 and preparing reports for my stakeholders. What data do you have access to via my Coupler.io MCP server connection?

Agentic prompts work best when AI has direct access to your data sources. Coupler.io bridges this gap by connecting AI models to your business applications such as CRMs, analytics platforms, marketing tools, and databases. As a result, the model can query fresh data instead of relying on manual CSV uploads or outdated screenshots. This turns AI from a writing assistant into an actual analytical partner.
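Under the hood, an agentic workflow is a loop: the model either requests a tool call or returns a final answer, and each tool result is fed back in as new context. The sketch below shows that loop with a scripted stand-in for the model and a hypothetical `get_sessions` tool that returns made-up numbers. It is not Coupler.io’s or any vendor’s API; real tool-calling interfaces define their own request and response formats.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> function returning data as text.
TOOLS: dict[str, Callable[[str], str]] = {
    "get_sessions": lambda month: f"sessions in {month}: 12400",  # made-up data
}


def fake_model(history: list[str]) -> dict[str, str]:
    """Scripted stand-in for an LLM: requests one tool call, then answers."""
    if not any(line.startswith("TOOL RESULT") for line in history):
        return {"type": "tool_call", "tool": "get_sessions", "arg": "2025-07"}
    return {"type": "final", "text": "July 2025 report drafted from: " + history[-1]}


def run_agent(task: str, max_steps: int = 5) -> str:
    """Loop until the model gives a final answer or the step limit is hit."""
    history = [f"TASK: {task}"]
    for _ in range(max_steps):
        decision = fake_model(history)
        if decision["type"] == "final":
            return decision["text"]
        # Execute the requested tool and feed the result back as context.
        result = TOOLS[decision["tool"]](decision["arg"])
        history.append(f"TOOL RESULT: {result}")
    return "Stopped: step limit reached without a final answer."


print(run_agent("Analyze marketing performance for July 2025"))
```

The step limit is a deliberate design choice: it keeps a confused agent from looping forever.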

Each type benefits from different engineering principles:

- Research prompts built for ChatGPT’s conversational style won’t work as well for deep analytical tasks better suited to Claude

- Conversational prompts that lack structure will produce vague outputs regardless of the model

- Agentic prompts without clear tool access or data connections will fail to deliver actionable results

Prompting is interaction. Just like people, AI systems respond differently depending on how they’re approached.

Ask a vague question, and you’ll get a vague answer. Overcomplicate it, and the model might fumble or give up. But we can’t have it give up now, can we?

Sweet spot of prompting

The sweet spot lies in clarity with intent. What do you want, and what context does the AI need to deliver it?

Many users struggle to be direct and to the point, and often they don’t even know what they want from the AI. Without clear objectives and sufficient context, even the most advanced models can’t deliver useful results. Humans face the same challenge. If the model was trained on content created by humans, how can we expect it to magically read our minds?

It helps to think in roles: a line like “You are a tax advisor for freelancers in Germany” does more than just set the scene. It gives the model grounding, purpose, and guardrails.

Even great AI prompts sometimes miss the mark. That’s normal.

The key is to treat your first output as a draft. Ask follow-ups. Add constraints. Reframe the goal.

This feedback loop, where you improve results by improving your input, is at the heart of advanced prompt engineering.
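The draft-and-refine loop can be sketched in a few lines. Below, a stand-in `draft` function plays the model, a hypothetical word-limit check plays your review, and each failed check adds a constraint to the prompt before the next attempt. In practice you are the reviewer and the refinements are judgment calls; the loop structure is the point, not the specific check.

```python
import re


def draft(prompt: str) -> str:
    """Stand-in for a model call: writes long unless the prompt sets a word limit."""
    words = ("AI automation saves marketers time on reporting, copy, and research. " * 5).split()
    limit = re.search(r"at most (\d+) words", prompt)
    if limit:
        words = words[: int(limit.group(1))]
    return " ".join(words)


def passes_review(output: str, max_words: int) -> bool:
    """Hypothetical stand-in for your own review of the draft."""
    return len(output.split()) <= max_words


def refine(prompt: str, max_words: int = 20, max_rounds: int = 3) -> tuple[str, str]:
    """Treat each output as a draft and tighten the prompt until it passes review."""
    output = draft(prompt)
    for _ in range(max_rounds):
        if passes_review(output, max_words):
            break
        # Add a constraint that addresses what went wrong, then try again.
        prompt += f" Keep it to at most {max_words} words."
        output = draft(prompt)
    return prompt, output


final_prompt, final_output = refine("Write a blurb about the ROI of AI automation.")
print(final_prompt)
print(final_output)
```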

And when you start connecting prompts to live data, that loop becomes even more powerful, and your prompts transform from creative exercises into operational workflows. Instead of asking, “Write a sales summary,” you can ask, “What deals are at risk this month based on engagement scores?” and get real answers in seconds.

Prompt design: building blocks of success

Put simply, writing a solid prompt is like assembling IKEA furniture.

You need all the parts, in the right order, with clear instructions. Here’s how it breaks down:

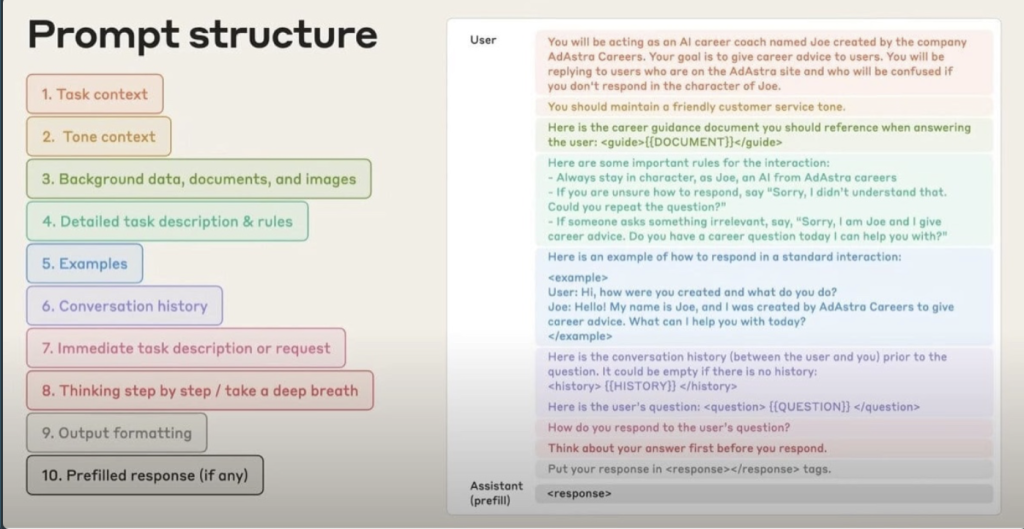

These elements work together like gears in a machine. Here’s how to think about each:

1. Task context – What the AI should do.

It can also be enhanced with role/persona context – Who the AI should be.

2. Tone context – Formal? Friendly? Academic? Etc.

3. Background data – Include key files or text the model must read.

Note: Background data can come from uploaded files, however the most efficient data analysis tasks tap into structured data sources. Coupler.io connects AI directly to your CRM, analytics platforms, and databases to give the model real-time context without token-heavy CSV dumps.

4. Detailed task description & rules – Be specific about what you want and don’t want.

5. Examples – Show a model exactly what kind of answer you expect.

6. Conversation history (optional) – Past messages to keep things coherent.

7. Immediate task or request – What’s the next action?

8. Thinking step by step – Literally tell the model to think logically.

9. Output formatting – Markdown, bullet points, JSON, whatever fits.

10. Prefilled response (optional) – Set up a scaffold for it to complete.
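If you reuse prompts often, the ten building blocks can be assembled programmatically. The sketch below is one possible approach: each block is an optional field, empty blocks are skipped, and the rest are joined in the order listed above. The `PromptBlocks` class, its field names, and the XML-style tags (one common convention for separating data from instructions) are all assumptions for illustration. The prefilled response is returned separately because, where an API supports prefilling, it is sent as the start of the model’s reply rather than inside your message.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBlocks:
    """Hypothetical container for the ten building blocks; every field is optional."""
    task_context: str = ""
    tone_context: str = ""
    background_data: str = ""
    rules: str = ""
    examples: list[str] = field(default_factory=list)
    conversation_history: str = ""
    immediate_request: str = ""
    think_step_by_step: bool = False
    output_format: str = ""
    prefill: str = ""

    def render(self) -> tuple[str, str]:
        """Return (prompt_text, prefill), leaving out any empty blocks."""
        parts = [
            self.task_context,
            self.tone_context,
            f"<data>\n{self.background_data}\n</data>" if self.background_data else "",
            self.rules,
            "\n".join(f"<example>\n{ex}\n</example>" for ex in self.examples),
            f"<history>\n{self.conversation_history}\n</history>" if self.conversation_history else "",
            self.immediate_request,
            "Think step by step before answering." if self.think_step_by_step else "",
            f"Format the answer as: {self.output_format}" if self.output_format else "",
        ]
        prompt = "\n\n".join(part for part in parts if part)
        return prompt, self.prefill


blocks = PromptBlocks(
    task_context="You are a B2B content strategist writing for marketers.",
    tone_context="Use a friendly but professional tone.",
    rules="Keep it under 500 words. Cite at least two studies. Do not invent statistics.",
    immediate_request="Write a blog post outline about the ROI of AI automation.",
    think_step_by_step=True,
    output_format="Markdown with H2 headings and bullet points",
    prefill="## ",
)
prompt, prefill = blocks.render()
print(prompt)
```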

Why structure matters

Thinking in blocks helps since different prompt types emphasize different building blocks. A research prompt may focus heavily on background data and rules. A chat prompt might need tone and conversation history. And an agentic prompt leans on step-by-step thinking and role play.

Most users make their AI prompts too vague or try to over-specify every detail. You want just enough detail to guide the model but not overwhelm it.

❌ Too vague: Write an article.

✅ Better: Write a 500-word blog post for marketers about the ROI of AI automation. Use bullet points and cite at least two studies.

But also avoid this:

❌ Too rigid: Write exactly 7 sentences using these 14 keywords, citing Forbes, in APA style, without using the letter ‘e’.

Let’s call out the most common mistakes:

- Forgetting to give any context.

- Asking the model to be creative with no boundaries.

- Ignoring formatting or copy-pasting raw Excel tables into prompts.

- Overprompting or stacking too many rules and expectations.
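Instead of pasting raw spreadsheet cells, it usually helps to convert rows into a clean, labeled table first. A minimal sketch, assuming your data is already loaded as a list of dictionaries (the column names and figures below are made up), that renders it as a Markdown table the model can read unambiguously:

```python
def to_markdown_table(rows: list[dict[str, object]]) -> str:
    """Render a list of dicts that share the same keys as a Markdown table."""
    if not rows:
        return ""
    headers = list(rows[0].keys())
    lines = [
        "| " + " | ".join(headers) + " |",
        "| " + " | ".join("---" for _ in headers) + " |",
    ]
    for row in rows:
        lines.append("| " + " | ".join(str(row[h]) for h in headers) + " |")
    return "\n".join(lines)


rows = [
    {"Landing Page": "/pricing", "Unique Visitors": 4200, "Form Completion %": 6.1},
    {"Landing Page": "/demo", "Unique Visitors": 3100, "Form Completion %": 3.8},
]
print(to_markdown_table(rows))
```

Explicit headers and one row per line give the model clear labels, which raw copy-pastes with merged cells or stray tabs often lack.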

If you’ve ever wondered why your output looks chaotic or off-topic, it’s likely one of these.

Prompting isn’t a one-shot game. Think of it more like adjusting a recipe. You don’t get Michelin-star dishes on your first try. It’s an act of refinement, every single attempt!

Start small. Add layers gradually. Test responses. Refine. Save your best-performing AI prompts.

Turn them into templates. What’s stopping you?
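Turning a winning prompt into a template can be as simple as replacing the parts that change with placeholders. Here’s a sketch using Python’s built-in `string.Template`; the placeholder names are hypothetical. `substitute` raises a `KeyError` when a placeholder is left unfilled, which catches incomplete prompts before they reach the model.

```python
from string import Template

# A saved, best-performing prompt with its variable parts turned into placeholders.
SUMMARY_TEMPLATE = Template(
    "You are a $role reporting to $audience. "
    "Summarize the text below in $length, using a $tone tone.\n\n$text"
)

prompt = SUMMARY_TEMPLATE.substitute(
    role="compliance officer",
    audience="an anxious CEO",
    length="three bullet points",
    tone="calm, direct",
    text="The vendor contract lacks a data-retention clause.",
)
print(prompt)

# Leaving out a placeholder fails loudly instead of sending a half-filled prompt.
try:
    SUMMARY_TEMPLATE.substitute(role="tax advisor")
except KeyError as missing:
    print(f"Missing placeholder: {missing}")
```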

Also, just remember that if you’re using a model like ChatGPT, it may do well with light context and instructions. But Claude or Gemini might handle longer, reasoning-heavy prompts better.

Now that you understand why context is everything and how to structure effective AI prompts using the core building blocks, it’s time to put that foundation to work.

In the next article, I’m exploring 5 essential prompting techniques you’ll use every day—from zero-shot and few-shot learning to questioning frameworks and output formatting strategies that consistently produce better results.