This is the second episode in our series on the art of prompting. In the first one, How to Write a Prompt, I explained why context is everything and how to structure effective prompts using the core building blocks. Now it’s time to put that foundation to work.

Great prompting is about the method behind the words. And a solid method starts with understanding how models learn and respond.

This article focuses on five practical techniques you’ll use every day—whether you’re summarizing documents, brainstorming ideas, or automating workflows. These aren’t theoretical concepts; they’re battle-tested methods that consistently produce better outputs.

Let’s walk through the five foundational techniques you’ll use again and again.

Direct instruction vs. example-based learning

Few-shot and zero-shot approaches are foundational prompting strategies:

Zero-shot prompting works by giving the model clear instructions without any prior examples. On the other hand, few-shot prompting gives the model a few prior examples to learn from. This is useful when the model isn’t initially giving you the tone and formatting you want or if your output must follow a specific structure or style.

Direct instruction (Zero-shot prompting)

As the name suggests, zero-shot prompting means the model does exactly what you tell it to—without seeing any examples first. This is useful for straightforward tasks where the instructions are clear and the output format is simple.

Modern LLMs like GPT-5 and Claude Sonnet 4.5 are trained on very large amounts of instruction-following data. So if your task is simple and low-context (e.g., summarize, translate, extract), zero-shot often nails it. It’s fast, clean, and works well for quick, one-off requests.

The best use cases are:

- Quick summaries from meeting transcripts

- Translating text into other languages

- Extracting key points from emails or reviews

Let’s look at an example:

Summarize the following definition in 3 bullet points in a casual tone and grade 4 reading level.

You want to make your ask concrete. Use numbers (e.g., in 3 bullet points), style guidance (e.g., in a casual tone), or format (e.g., as JSON). With a request this specific, the model can deliver what you need without seeing any examples.
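If you send the same kind of zero-shot request often, it can help to keep the concrete parts (count, tone, reading level) as parameters. The sketch below is only an illustration: the function name and its defaults are hypothetical, and it builds the prompt text without calling any model.

```python
def build_zero_shot_prompt(text: str, bullets: int = 3,
                           tone: str = "casual",
                           reading_level: str = "grade 4") -> str:
    """Return a zero-shot prompt: instructions only, no examples."""
    instruction = (
        f"Summarize the following definition in {bullets} bullet points "
        f"in a {tone} tone and {reading_level} reading level."
    )
    # Keep the instruction and the source text in separate paragraphs
    # so it is clear where the ask ends and the material begins.
    return f"{instruction}\n\n{text}"


print(build_zero_shot_prompt("<paste the definition here>"))
```

Changing one argument (say, `bullets=5`) gives you a new variant without retyping the whole prompt.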

Few-shot learning (Example-based prompting)

You show the model a few examples of what you want, then ask it to replicate the pattern.

It teaches the model structure, tone, and nuance. This is ideal when working on domain-specific tasks (like financial insights or marketing briefs), or when your ideal output is hard to define but easy to recognize when you see it.

The best use cases are:

- Weekly report summaries across sales pipelines or marketing assets

- QA tagging for support chats

- Translating business feedback into product tickets

Let’s look at the prompt example:

Here are two customer inquiry responses I've written. Please draft a third response for the refund request following the same empathetic and solution-focused tone.

Example 1 – Feature Request:

Hi Sarah,

Thank you for taking the time to share this suggestion! Our product team is actively exploring bulk export functionality, and your feedback helps us prioritize. I've added your vote to the feature request (FR-847). We'll email you directly when this goes into development.

In the meantime, would a CSV export workaround help? I'd be happy to walk you through it.

Best regards,

Example 2 – Technical Issue:

Hi Marcus,

I'm sorry you're experiencing login issues—that's frustrating. I've checked your account and see the problem: your session expired after our security update.

Here's the quick fix: Clear your browser cache and try this password reset link. You should be back in within 2 minutes.

If this doesn't work, reply to this email and I'll schedule a 10-minute call to resolve it together.

Best regards,

Now write a response for this inquiry:

I purchased your annual plan last week but need to cancel and get a refund. The product doesn't integrate with our CRM as I expected.

It’s ideal to use real outputs as examples. Keep them consistent in tone and length. Always follow up with the exact instruction after examples (e.g., Now write one more like this).

Pro tip: Few-shot prompting works even better when your data is consistently structured. When using data flows from Coupler.io, your examples maintain the same schema across time, which makes pattern recognition far more reliable than inconsistent manual uploads.
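The few-shot structure described in this section (intro, consistently labeled examples, then the exact instruction last) can be assembled programmatically. This is a minimal sketch under assumptions: `Example` and `build_few_shot_prompt` are hypothetical names, and the placeholder strings stand in for your real responses.

```python
from dataclasses import dataclass


@dataclass
class Example:
    label: str     # e.g. "Feature Request"
    response: str  # a real response you have written


def build_few_shot_prompt(intro: str, examples: list[Example], inquiry: str) -> str:
    """Assemble intro, numbered examples, and the final instruction, in that order."""
    parts = [intro]
    for i, ex in enumerate(examples, start=1):
        # Every example gets the same header shape so the pattern is easy to spot.
        parts.append(f"Example {i} - {ex.label}:\n\n{ex.response}")
    # The exact instruction always comes after the examples.
    parts.append(f"Now write a response for this inquiry:\n\n{inquiry}")
    return "\n\n".join(parts)


prompt = build_few_shot_prompt(
    intro="Here are two customer inquiry responses I've written. "
          "Please draft a third response following the same tone.",
    examples=[
        Example("Feature Request", "<paste your first response here>"),
        Example("Technical Issue", "<paste your second response here>"),
    ],
    inquiry="<paste the new customer inquiry here>",
)
print(prompt)
```

Because every example passes through the same formatter, the examples stay consistent in structure even as you swap their contents.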

Output-focused prompting (Structured description without examples)

In this type of prompting, rather than showing examples, you tell the model what the answer should look like.

It works because it gives the model flexibility while keeping the output tidy. It is especially useful when batch-processing prompts at scale (e.g., 1,000 product listings), where examples would bloat the context window.

The best use cases are:

- Generating product descriptions in bulk

- Writing LinkedIn post variations

- Creating role-based summaries from analytics dashboards

Let’s look at an example:

Write a witty 5-bullet list, each under 12 words, that describes this product.

Be specific about format and tone, and test prompts across current frontier models (GPT-5, Claude Sonnet 4.5, Gemini 2.5 Pro) to check consistency. Each model has slightly different strengths — GPT-5 excels at coding and task completion, Claude at nuanced analysis and long-form reasoning, and Gemini at multimodal tasks.
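For batch work, one way to apply output-focused prompting is to keep a single output description and pair it with each item. The sketch below assumes each product is a dict with hypothetical `name` and `details` keys; it only generates prompt strings and does not send them anywhere.

```python
# The output description from the example above, reused for every item.
OUTPUT_SPEC = (
    "Write a witty 5-bullet list, each under 12 words, "
    "that describes this product."
)


def build_batch_prompts(products: list[dict[str, str]]) -> list[str]:
    """Return one prompt per product, all sharing the same output description."""
    prompts = []
    for product in products:
        prompts.append(
            f"{OUTPUT_SPEC}\n\n"
            f"Product: {product['name']}\n"
            f"Details: {product['details']}"
        )
    return prompts


# Made-up sample rows, only to show the shape of the input.
sample_products = [
    {"name": "Desk lamp", "details": "LED, dimmable, USB-C powered"},
    {"name": "Travel mug", "details": "Insulated steel, leak-proof lid"},
]
for prompt in build_batch_prompts(sample_products):
    print(prompt, end="\n\n---\n\n")
```

Since no examples are embedded, each prompt stays short no matter how many listings you process.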

Questioning frameworks that work

In prompting, the way you frame a question directly influences the model’s logic, focus, and creativity.

The frameworks below are not shortcuts; they are mental models that work across analytics, copywriting, product strategy, and executive decision-making.

Why / how / what if

Use this when you need the model to reason or explore possibilities.

- These questions unlock the rationale behind outcomes or simulate hypotheticals.

- Example:

Why did Jaguar's new branding fail in 2025?

- Use case: Marketing analytics, budget strategy, root cause analysis

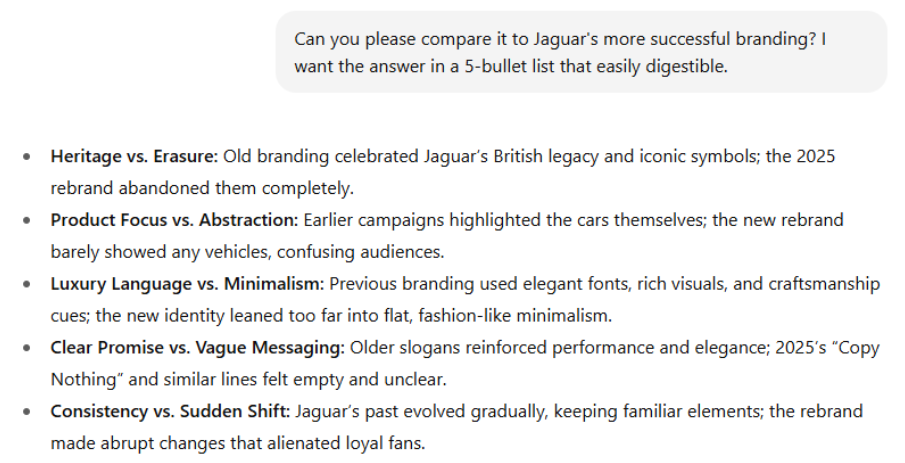

Compare / contrast

Use when clarity is buried in choices. This forces the model to delineate differences, something humans often skip.

- Example:

Can you please compare it to Jaguar's more successful branding? I want the answer in a 5-bullet list that is easily digestible.

- Use case: Product strategy, customer segmentation, A/B testing

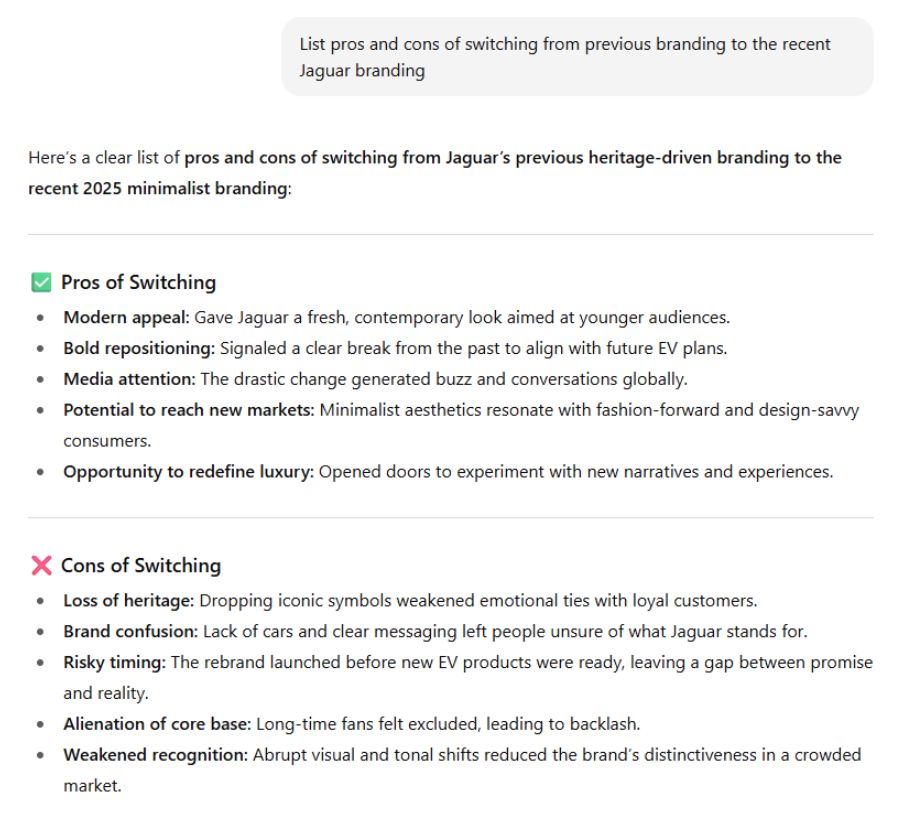

Pros / cons

Perfect for risk/reward decisions. Helps teams surface blind spots.

- Example:

List pros and cons of switching from previous branding to the recent Jaguar branding.

- Use case: Procurement, feature evaluation, change management

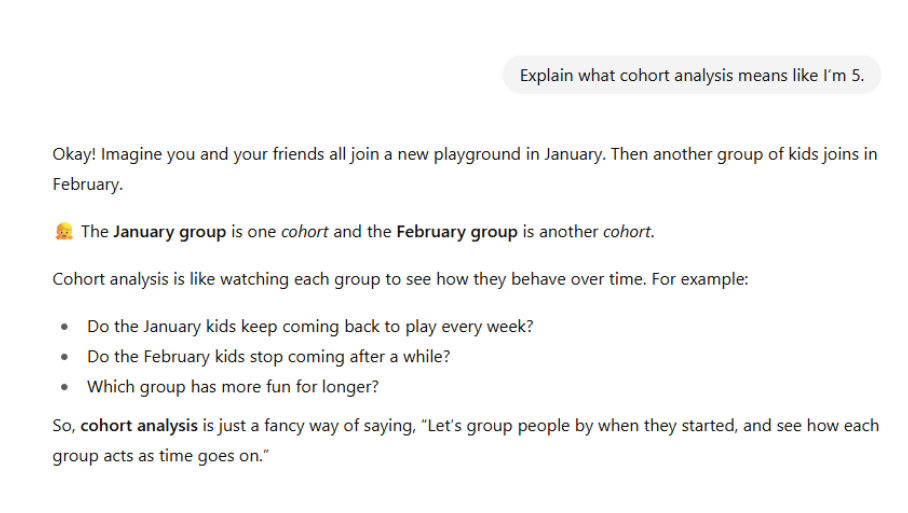

Explain like I’m 5

Simplifies complex results for decision-makers or junior staff. You’re clarifying without being condescending.

- Example:

Explain what cohort analysis means like I’m 5.

- Use case: Internal enablement, reporting, onboarding

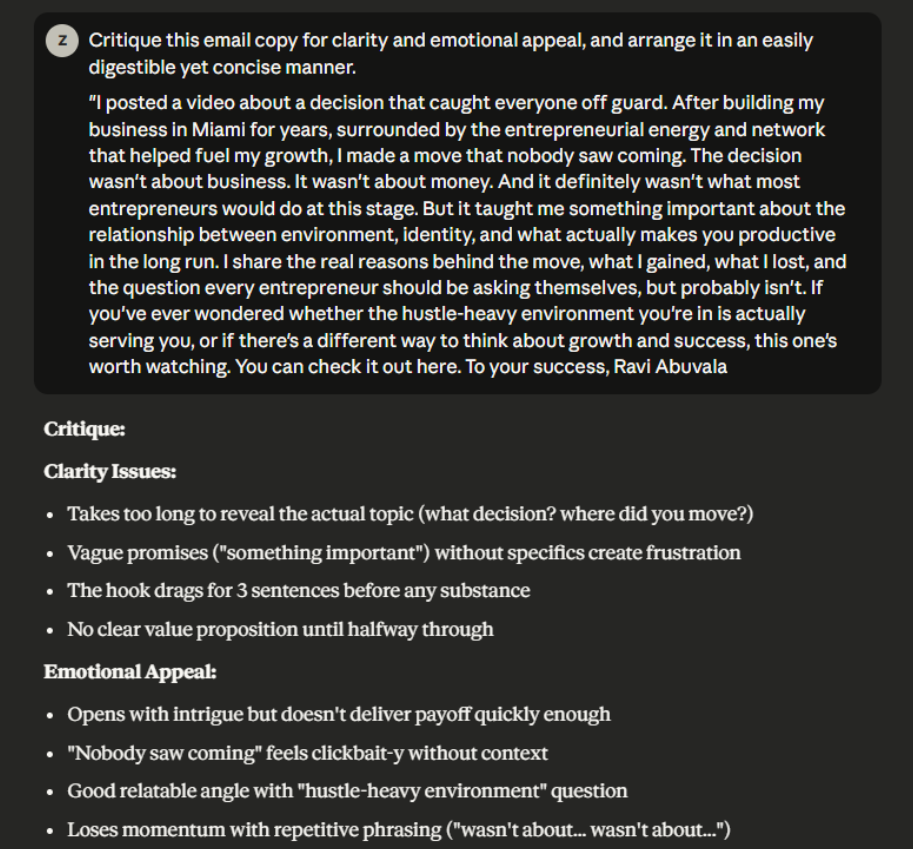

Critique / improve

Moves the model from passive generation to active revision. Use it to pressure-test outputs.

- Example:

Critique this email copy for clarity and emotional appeal.

- Use case: Creative review, QA, customer messaging

These frameworks plug into any kind of task, be it technical, creative, or strategic.
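Because the frameworks are reusable question shapes, you could keep them as small templates and fill in the subject per task. This is an illustrative sketch only: the template wording, the dictionary keys, and the `ask` helper are assumptions, not a fixed standard.

```python
# One template per questioning framework; placeholders are filled per task.
FRAMEWORKS = {
    "why": "Why did {subject} happen?",
    "compare": "Compare {subject} with {other}. "
               "Answer in a {n}-bullet list that is easy to digest.",
    "pros_cons": "List the pros and cons of {subject}.",
    "eli5": "Explain what {subject} means like I'm 5.",
    "critique": "Critique {subject} for {criteria}.",
}


def ask(framework: str, **fields: object) -> str:
    """Fill the chosen framework template; raises KeyError if a field is missing."""
    return FRAMEWORKS[framework].format(**fields)


print(ask("eli5", subject="cohort analysis"))
print(ask("critique", subject="this email copy",
          criteria="clarity and emotional appeal"))
```

Keeping the templates in one place makes it easy to run the same subject through several frameworks and compare the answers.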

Context setting & priming

A model only knows what you tell it. Use priming to teach it how to think, not just what to say.

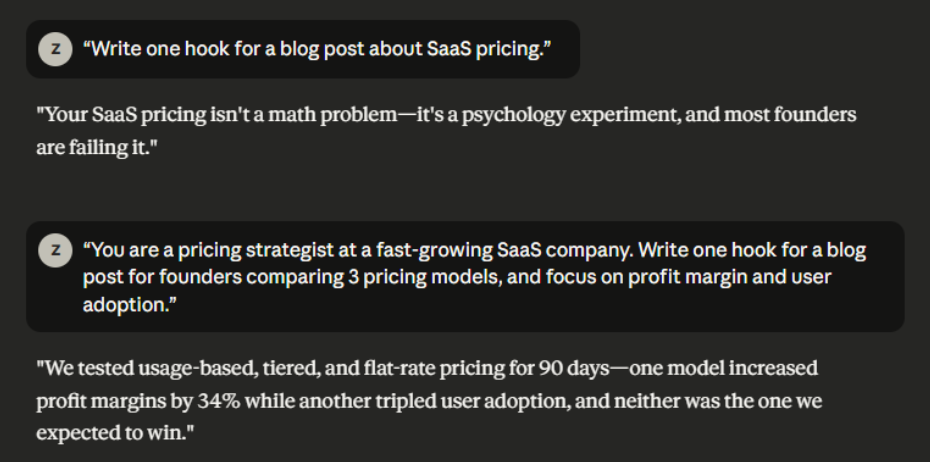

Before:

Write a hook for a blog post about SaaS pricing.

After (Primed):

You are a pricing strategist at a fast-growing SaaS company. Write a hook for a blog post for founders comparing 3 pricing models, and focus on profit margin and user adoption.

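That extra context typically gets you noticeably more targeted results, because the model knows who is speaking, who is reading, and what matters to them.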
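In code, priming is often expressed as a separate system message that sets the persona before the task arrives. The sketch below assumes the role/content message layout that many chat-style APIs accept; the function name and the `background` parameter are hypothetical, and no API is called.

```python
from typing import Sequence


def build_primed_messages(persona: str, task: str,
                          background: Sequence[str] = ()) -> list[dict[str, str]]:
    """Return a system message that primes the model, followed by the user task."""
    system_lines = [f"You are {persona}."]
    # Optional background facts go into the priming message, not the task itself.
    system_lines.extend(background)
    return [
        {"role": "system", "content": "\n".join(system_lines)},
        {"role": "user", "content": task},
    ]


messages = build_primed_messages(
    persona="a pricing strategist at a fast-growing SaaS company",
    task=("Write a hook for a blog post for founders comparing 3 pricing "
          "models, and focus on profit margin and user adoption."),
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Separating persona from task lets you reuse the same priming across many different requests.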

Now imagine that same prompt, but instead of working from memory, Claude has access to your actual Q3 sales data, competitor pricing sheets, and customer churn rates. This is possible with AI integrations by Coupler.io. You can choose which datasets AI can use for data analysis to shift from an educated guess to a data-backed strategy.

Output formatting = Better outputs

What the model says is important. How it structures the output is just as important, if not more so.

Format helps both humans and machines:

- Bullet lists for readability

- Headings to structure longer responses

- Markdown or HTML if pasting into CMS

- JSON or YAML if piping into dev workflows

- Tables for comparisons

And if you need a specific tone or language (e.g., American English, conversational style, technical documentation), say so clearly:

Summarize this article in 3 bullet points. Use professional but approachable language, as if explaining to a busy marketing manager who needs the key takeaways quickly.

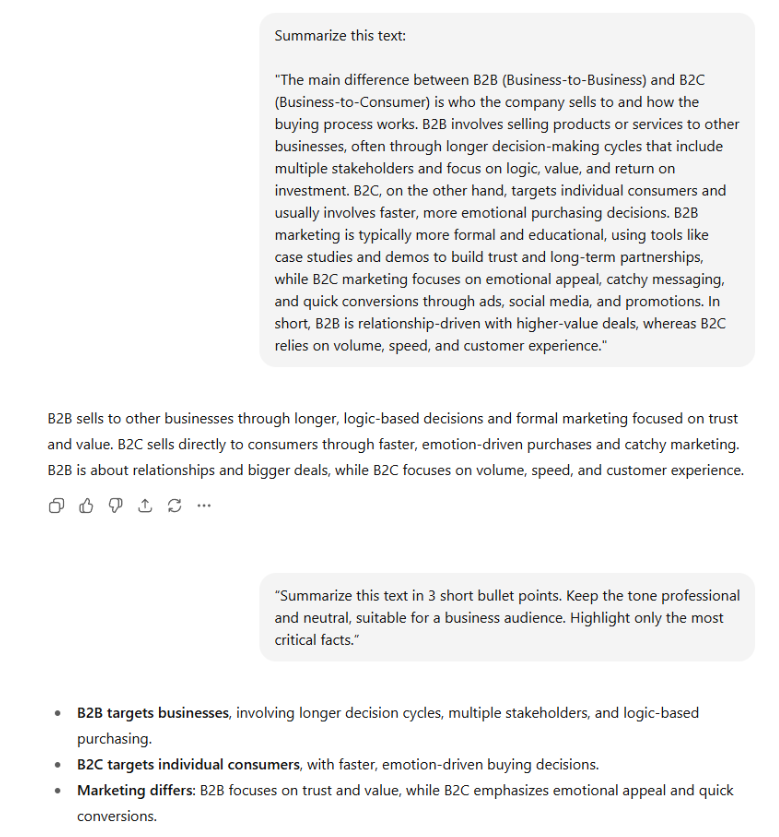
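When the output is piped into a dev workflow, asking for JSON pays off only if you also check that the reply has the shape you requested. Here is a minimal sketch using Python's standard `json` module; the `bullets` key, the instruction wording, and the sample reply are assumptions made for illustration.

```python
import json

# Hypothetical format instruction; the "bullets" key is an assumed convention.
FORMAT_INSTRUCTION = (
    "Summarize this article in 3 bullet points. "
    'Respond with JSON only, shaped as {"bullets": ["...", "...", "..."]}.'
)


def parse_summary(raw: str, expected: int = 3) -> list[str]:
    """Parse a model reply and verify it matches the requested structure."""
    data = json.loads(raw)  # raises json.JSONDecodeError if the reply is not JSON
    bullets = data.get("bullets") if isinstance(data, dict) else None
    if not isinstance(bullets, list) or len(bullets) != expected:
        raise ValueError(f"expected {expected} bullets, got: {bullets!r}")
    if not all(isinstance(b, str) for b in bullets):
        raise ValueError("every bullet must be a string")
    return bullets


# A hand-written stand-in for a model reply, used only to exercise the parser.
sample_reply = '{"bullets": ["First point", "Second point", "Third point"]}'
print(parse_summary(sample_reply))
```

If parsing fails, a common next step is to resend the prompt with the format instruction restated more explicitly.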

Practical exercise: Make a bad prompt better

We’ve talked theory. Now let’s see it in action. Here’s a real example of a vague, unhelpful prompt, and how to turn it into a great one.

The bad prompt

Summarize this text.

A better prompt

Summarize the following news article in 3 short bullet points.

Keep the tone professional and neutral, suitable for a business audience.

Highlight only the most critical facts.

The difference

The second version is better because it tells the model exactly what to produce: a fixed length and format (3 short bullet points), a tone and audience (professional, neutral, business readers), and a scope (only the most critical facts). The resulting summary drops unnecessary details and is easy for a business audience to scan and understand quickly.

My approach focuses on three key areas:

- Add more details: Be more specific about what you want and how you want it delivered

- Add what NOT TO DO: Explicitly state what you want to avoid – this prevents common failure modes

- Add context: Provide background information, constraints, and relevant details about your situation
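The three areas above can be treated as optional layers added around a base prompt. The following sketch is one hypothetical way to do that; `refine_prompt` and its parameters are illustrative names, and the `avoid` and `context` values in the usage example are made up.

```python
from typing import Sequence


def refine_prompt(base: str, details: Sequence[str] = (),
                  avoid: Sequence[str] = (), context: str = "") -> str:
    """Layer context, extra details, and explicit don'ts around a base prompt."""
    lines = []
    if context:
        lines.append(f"Context: {context}")  # add context
    lines.append(base)
    lines.extend(details)                    # add more details
    lines.extend(f"Do not {item}." for item in avoid)  # add what NOT to do
    return "\n".join(lines)


print(refine_prompt(
    "Summarize the following news article in 3 short bullet points.",
    details=[
        "Keep the tone professional and neutral, suitable for a business audience.",
        "Highlight only the most critical facts.",
    ],
    avoid=["include opinions or speculation"],
    context="The summary goes into a weekly briefing for executives.",
))
```

Starting from the bare prompt and adding one layer at a time also makes it easier to see which change improved the response.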

Sometimes I first need to see a response to understand what to improve in the prompt. If the result isn’t what I need, I adjust the prompt and try again.

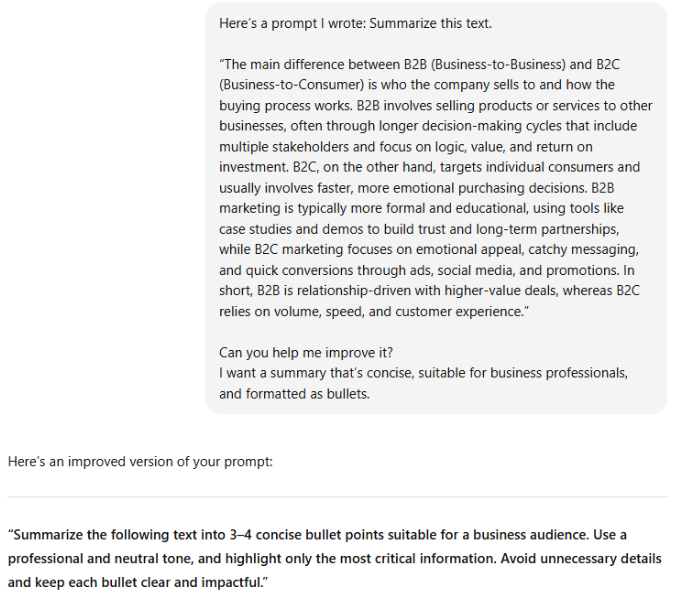

Use AI to improve your prompt (Promptception)

This is the best part. You can literally just ask the AI to help you refine prompts.

Let’s try something:

This not only trains your own prompt muscle but also builds a feedback loop with the model.

Seriously. Treat the model like a collaborator.

Ask it:

- What’s missing from this prompt?

- How can I make this clearer or more targeted?

- Give me 3 stronger versions.

You’ll be surprised how well it works.
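To make this feedback loop repeatable, you could wrap any draft prompt in a review request that asks for gaps, clarity fixes, and stronger versions. This is a rough sketch: the function name and the `<prompt>` delimiter tags are assumptions chosen for illustration.

```python
REVIEW_QUESTIONS = [
    "What's missing from this prompt?",
    "How can I make this clearer or more targeted?",
    "Give me 3 stronger versions.",
]


def build_review_prompt(draft: str) -> str:
    """Wrap a draft prompt in a request for critique and improved versions."""
    questions = "\n".join(f"- {q}" for q in REVIEW_QUESTIONS)
    return "\n\n".join([
        "Here is a prompt I wrote:",
        # Delimiters keep the draft separate from the review request.
        f"<prompt>\n{draft}\n</prompt>",
        questions,
    ])


print(build_review_prompt("Summarize this text."))
```

Feed the strongest suggested version back through the same function to run another round of refinement.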

You’ve now mastered the essential techniques, but what happens when you face complex, multi-step challenges that require deeper reasoning?

In the next article, I’ll level up with advanced prompting techniques such as Chain-of-Thought reasoning, Tree-of-Thought exploration, and recursive refinement that expand what’s possible with AI.